This lecture deals with maximum likelihood estimation of the parameters of the normal distribution.

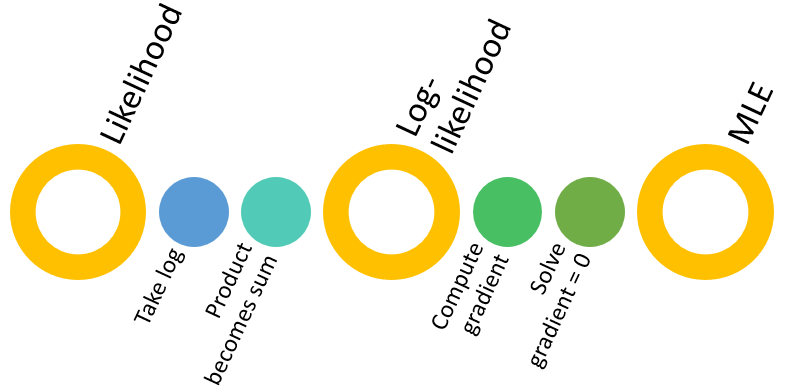

Before continuing, you might want to revise the basics of maximum likelihood estimation (MLE).

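Our sample is made up of the first $n$ terms of an IID sequence $\{X_n\}$ of normal random variables having mean $\mu$ and variance $\sigma^2$.

The probability density function of a generic term of the sequence is

$$f_X(x_j) = (2\pi\sigma^2)^{-1/2} \exp\left(-\frac{1}{2}\frac{(x_j-\mu)^2}{\sigma^2}\right)$$

The mean $\mu$ and the variance $\sigma^2$ are the two parameters that need to be estimated.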

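The likelihood function is

$$L(\mu,\sigma^2;x_1,\ldots,x_n) = (2\pi\sigma^2)^{-n/2} \exp\left(-\frac{1}{2\sigma^2}\sum_{j=1}^{n}(x_j-\mu)^2\right)$$

Given the assumption that the observations $x_1,\ldots,x_n$ from the sample are IID, the likelihood function can be written as

$$\begin{aligned}
L(\mu,\sigma^2;x_1,\ldots,x_n) &= \prod_{j=1}^{n} f_X(x_j) \\
&= \prod_{j=1}^{n} (2\pi\sigma^2)^{-1/2} \exp\left(-\frac{1}{2}\frac{(x_j-\mu)^2}{\sigma^2}\right) \\
&= (2\pi\sigma^2)^{-n/2} \exp\left(-\frac{1}{2\sigma^2}\sum_{j=1}^{n}(x_j-\mu)^2\right)
\end{aligned}$$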

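The log-likelihood function is

$$l(\mu,\sigma^2;x_1,\ldots,x_n) = -\frac{n}{2}\ln(2\pi) - \frac{n}{2}\ln(\sigma^2) - \frac{1}{2\sigma^2}\sum_{j=1}^{n}(x_j-\mu)^2$$

By taking the natural logarithm of the likelihood function, we get

$$\begin{aligned}
l(\mu,\sigma^2;x_1,\ldots,x_n) &= \ln\left[(2\pi\sigma^2)^{-n/2} \exp\left(-\frac{1}{2\sigma^2}\sum_{j=1}^{n}(x_j-\mu)^2\right)\right] \\
&= -\frac{n}{2}\ln(2\pi\sigma^2) - \frac{1}{2\sigma^2}\sum_{j=1}^{n}(x_j-\mu)^2 \\
&= -\frac{n}{2}\ln(2\pi) - \frac{n}{2}\ln(\sigma^2) - \frac{1}{2\sigma^2}\sum_{j=1}^{n}(x_j-\mu)^2
\end{aligned}$$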
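As a quick numerical check of the log-likelihood formula, the following minimal sketch compares the closed form with the logarithm of the product of the individual densities. The function names `normal_pdf` and `log_likelihood`, the sample values, and the parameter values are all made up for illustration.

```python
import math

def normal_pdf(x, mu, sigma2):
    """Density of a normal random variable with mean mu and variance sigma2."""
    return (2 * math.pi * sigma2) ** -0.5 * math.exp(-0.5 * (x - mu) ** 2 / sigma2)

def log_likelihood(xs, mu, sigma2):
    """Closed-form log-likelihood of an IID normal sample xs."""
    n = len(xs)
    sum_sq = sum((x - mu) ** 2 for x in xs)
    return (-0.5 * n * math.log(2 * math.pi)
            - 0.5 * n * math.log(sigma2)
            - sum_sq / (2 * sigma2))

sample = [2.1, 1.4, 3.3, 2.8, 1.9]  # hypothetical data, for illustration only

# Log of the product of densities (the likelihood under the IID assumption).
direct = math.log(math.prod(normal_pdf(x, 1.5, 0.8) for x in sample))
# Closed-form expression obtained by taking the natural logarithm.
closed = log_likelihood(sample, 1.5, 0.8)

print(direct, closed)
```

The two printed numbers should agree up to floating-point rounding. For large samples the product of densities underflows, which is one practical reason to work with the log-likelihood directly.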

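The maximum likelihood estimators of the mean and the variance are

$$\hat{\mu} = \frac{1}{n}\sum_{j=1}^{n} x_j, \qquad \hat{\sigma}^2 = \frac{1}{n}\sum_{j=1}^{n}(x_j-\hat{\mu})^2$$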

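We need to solve the following maximization problem:

$$\max_{\mu,\sigma^2} \; l(\mu,\sigma^2;x_1,\ldots,x_n)$$

The first order conditions for a maximum are

$$\frac{\partial}{\partial\mu} l(\mu,\sigma^2;x_1,\ldots,x_n) = 0, \qquad \frac{\partial}{\partial\sigma^2} l(\mu,\sigma^2;x_1,\ldots,x_n) = 0$$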

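The partial derivative of the log-likelihood with respect to the mean is

$$\frac{\partial}{\partial\mu} l(\mu,\sigma^2;x_1,\ldots,x_n) = \frac{1}{\sigma^2}\sum_{j=1}^{n}(x_j-\mu) = \frac{1}{\sigma^2}\left(\sum_{j=1}^{n}x_j - n\mu\right)$$

which is equal to zero only if

$$\sum_{j=1}^{n}x_j - n\mu = 0$$

Therefore, the first of the two first-order conditions implies

$$\mu = \frac{1}{n}\sum_{j=1}^{n}x_j$$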

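The partial derivative of the log-likelihood with respect to the variance is

$$\frac{\partial}{\partial\sigma^2} l(\mu,\sigma^2;x_1,\ldots,x_n) = -\frac{n}{2\sigma^2} + \frac{1}{2\sigma^4}\sum_{j=1}^{n}(x_j-\mu)^2 = \frac{1}{2\sigma^2}\left[\frac{1}{\sigma^2}\sum_{j=1}^{n}(x_j-\mu)^2 - n\right]$$

which, if we rule out $\sigma^2 = 0$, is equal to zero only if

$$\sigma^2 = \frac{1}{n}\sum_{j=1}^{n}(x_j-\mu)^2$$

Thus, the system of first order conditions is solved by

$$\hat{\mu} = \frac{1}{n}\sum_{j=1}^{n}x_j, \qquad \hat{\sigma}^2 = \frac{1}{n}\sum_{j=1}^{n}(x_j-\hat{\mu})^2$$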
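The first order conditions can be verified numerically. The sketch below evaluates the two partial derivatives of the log-likelihood at the sample mean and at the variance computed with divisor $n$; both should vanish up to rounding error. The function name `score` and the data are hypothetical.

```python
def score(xs, mu, sigma2):
    """Partial derivatives of the normal log-likelihood w.r.t. mu and sigma2."""
    n = len(xs)
    sum_dev = sum(x - mu for x in xs)
    sum_sq = sum((x - mu) ** 2 for x in xs)
    d_mu = sum_dev / sigma2
    d_sigma2 = -n / (2 * sigma2) + sum_sq / (2 * sigma2 ** 2)
    return d_mu, d_sigma2

sample = [2.1, 1.4, 3.3, 2.8, 1.9]  # hypothetical data, for illustration only

# Candidate solution of the first order conditions.
mu_hat = sum(sample) / len(sample)
sigma2_hat = sum((x - mu_hat) ** 2 for x in sample) / len(sample)

g_mu, g_sigma2 = score(sample, mu_hat, sigma2_hat)
print(g_mu, g_sigma2)  # both are zero up to floating-point error
```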

The estimator $\hat{\mu}$ is therefore equal to the sample mean, and the estimator $\hat{\sigma}^2$ is equal to the unadjusted sample variance.
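A minimal sketch of the two estimators in Python, using only the standard library. The helper name `normal_mle` and the data are made up for illustration. The comparison with the `statistics` module highlights that the maximum likelihood estimator of the variance divides by $n$ (unadjusted), not by $n-1$ (adjusted).

```python
import statistics

def normal_mle(xs):
    """Return the ML estimates (mean, variance) for an IID normal sample."""
    n = len(xs)
    mu_hat = sum(xs) / n
    # Unadjusted sample variance: divisor n, not n - 1.
    sigma2_hat = sum((x - mu_hat) ** 2 for x in xs) / n
    return mu_hat, sigma2_hat

sample = [2.1, 1.4, 3.3, 2.8, 1.9]  # hypothetical data, for illustration only
mu_hat, sigma2_hat = normal_mle(sample)

print(mu_hat, sigma2_hat)
# statistics.pvariance divides by n and matches the ML estimate;
# statistics.variance divides by n - 1 and is strictly larger here.
print(statistics.pvariance(sample), statistics.variance(sample))
```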

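The vector $\hat{\theta} = [\hat{\mu}, \hat{\sigma}^2]$ is asymptotically normal with asymptotic mean equal to the true parameter vector $\theta_0 = [\mu_0, \sigma_0^2]$ and asymptotic covariance matrix equal to

$$V = \begin{bmatrix} \sigma_0^2 & 0 \\ 0 & 2\sigma_0^4 \end{bmatrix}$$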

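Denote by $l(\theta;x_j) = \ln f_X(x_j)$ the log-likelihood of a single observation, with $\theta = [\mu, \sigma^2]$. The first entry of the score vector is

$$\frac{\partial}{\partial\mu} l(\theta;x_j) = \frac{x_j-\mu}{\sigma^2}$$

The second entry of the score vector is

$$\frac{\partial}{\partial\sigma^2} l(\theta;x_j) = -\frac{1}{2\sigma^2} + \frac{(x_j-\mu)^2}{2\sigma^4}$$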

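In order to compute the Hessian $\nabla_{\theta\theta}\, l(\theta;x_j)$ we need to compute all second order partial derivatives. We have

$$\frac{\partial^2}{\partial\mu^2} l(\theta;x_j) = -\frac{1}{\sigma^2}$$

and

$$\frac{\partial^2}{\partial(\sigma^2)^2} l(\theta;x_j) = \frac{1}{2\sigma^4} - \frac{(x_j-\mu)^2}{\sigma^6}$$

Finally,

$$\frac{\partial^2}{\partial\mu\,\partial\sigma^2} l(\theta;x_j) = -\frac{x_j-\mu}{\sigma^4}$$

which, as you might want to check, is also equal to the other cross-partial derivative $\frac{\partial^2}{\partial\sigma^2\,\partial\mu} l(\theta;x_j)$.

Therefore, the Hessian is

$$\nabla_{\theta\theta}\, l(\theta;x_j) = \begin{bmatrix} -\dfrac{1}{\sigma^2} & -\dfrac{x_j-\mu}{\sigma^4} \\[2ex] -\dfrac{x_j-\mu}{\sigma^4} & \dfrac{1}{2\sigma^4} - \dfrac{(x_j-\mu)^2}{\sigma^6} \end{bmatrix}$$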
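The second order partial derivatives can be checked against central finite differences of the single-observation log-likelihood. This is only a sanity-check sketch: the function names, the step size `h`, and the evaluation point are assumptions chosen for illustration.

```python
import math

def loglik_one(x, mu, sigma2):
    """Log-likelihood of a single observation x from a normal distribution."""
    return (-0.5 * math.log(2 * math.pi) - 0.5 * math.log(sigma2)
            - (x - mu) ** 2 / (2 * sigma2))

def hessian_one(x, mu, sigma2):
    """Analytical Hessian of loglik_one with respect to (mu, sigma2)."""
    h_mm = -1 / sigma2
    h_ss = 1 / (2 * sigma2 ** 2) - (x - mu) ** 2 / sigma2 ** 3
    h_ms = -(x - mu) / sigma2 ** 2
    return [[h_mm, h_ms], [h_ms, h_ss]]

def numeric_hessian(x, mu, sigma2, h=1e-4):
    """Central finite-difference approximation of the same Hessian."""
    f = lambda m, s: loglik_one(x, m, s)
    h_mm = (f(mu + h, sigma2) - 2 * f(mu, sigma2) + f(mu - h, sigma2)) / h ** 2
    h_ss = (f(mu, sigma2 + h) - 2 * f(mu, sigma2) + f(mu, sigma2 - h)) / h ** 2
    h_ms = (f(mu + h, sigma2 + h) - f(mu + h, sigma2 - h)
            - f(mu - h, sigma2 + h) + f(mu - h, sigma2 - h)) / (4 * h ** 2)
    return [[h_mm, h_ms], [h_ms, h_ss]]

# Arbitrary evaluation point, for illustration only.
analytic = hessian_one(1.7, 1.0, 2.0)
numeric = numeric_hessian(1.7, 1.0, 2.0)
print(analytic)
print(numeric)
```

The two matrices should agree to several decimal places; the residual difference comes from the truncation and rounding error of the finite-difference scheme.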

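By the information equality, and because $\mathrm{E}[X_j-\mu_0] = 0$ and $\mathrm{E}[(X_j-\mu_0)^2] = \sigma_0^2$, we have that

$$V = \left\{-\mathrm{E}\left[\nabla_{\theta\theta}\, l(\theta_0;X_j)\right]\right\}^{-1} = \begin{bmatrix} \dfrac{1}{\sigma_0^2} & 0 \\[2ex] 0 & \dfrac{1}{2\sigma_0^4} \end{bmatrix}^{-1}$$

As a consequence, the asymptotic covariance matrix is

$$V = \begin{bmatrix} \sigma_0^2 & 0 \\ 0 & 2\sigma_0^4 \end{bmatrix}$$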

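In other words, the distribution of the vector $[\hat{\mu}, \hat{\sigma}^2]$ can be approximated by a multivariate normal distribution with mean $[\mu_0, \sigma_0^2]$ and covariance matrix

$$\frac{1}{n}V = \begin{bmatrix} \sigma_0^2/n & 0 \\ 0 & 2\sigma_0^4/n \end{bmatrix}$$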
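The quality of this approximation can be explored with a small Monte Carlo experiment. The sketch below repeatedly draws samples from a normal distribution, computes the two estimators on each sample, and compares their empirical variances with $\sigma_0^2/n$ and $2\sigma_0^4/n$. The function name `simulate_mle`, the sample size, the number of replications, the seed, and the true parameter values are all arbitrary choices made for illustration.

```python
import random
import statistics

def simulate_mle(n, mu0, sigma2_0, reps, seed=0):
    """Draw `reps` normal samples of size n and return the ML estimates."""
    rng = random.Random(seed)
    sd0 = sigma2_0 ** 0.5
    mu_hats, sigma2_hats = [], []
    for _ in range(reps):
        xs = [rng.gauss(mu0, sd0) for _ in range(n)]
        mu_hat = sum(xs) / n
        sigma2_hat = sum((x - mu_hat) ** 2 for x in xs) / n
        mu_hats.append(mu_hat)
        sigma2_hats.append(sigma2_hat)
    return mu_hats, sigma2_hats

n, mu0, sigma2_0 = 200, 1.0, 4.0  # assumed true parameters
mu_hats, sigma2_hats = simulate_mle(n, mu0, sigma2_0, reps=2000)

# Empirical variance of each estimator vs. the asymptotic approximation.
print(statistics.pvariance(mu_hats), sigma2_0 / n)
print(statistics.pvariance(sigma2_hats), 2 * sigma2_0 ** 2 / n)
```

With these settings the empirical variances should be close to the asymptotic values, up to simulation noise. The agreement for $\hat{\sigma}^2$ is only approximate in finite samples, since the result is asymptotic.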

StatLect has several pages that contain detailed derivations of MLEs. Learn how to find the estimators of the parameters of the following distributions and models.

| Model | Type | Solution |
|---|---|---|
| Exponential distribution | Univariate distribution | Analytical |
| Poisson distribution | Univariate distribution | Analytical |
| T distribution | Univariate distribution | Numerical |
| Multivariate normal distribution | Multivariate distribution | Analytical |
| Gaussian mixture | Mixture of distributions | Numerical (EM) |
| Normal linear regression model | Regression model | Analytical |
| Logistic classification model | Classification model | Numerical |
| Probit classification model | Classification model | Numerical |

Please cite as:

Taboga, Marco (2021). "Normal distribution - Maximum Likelihood Estimation", Lectures on probability theory and mathematical statistics. Kindle Direct Publishing. Online appendix. https://www.statlect.com/fundamentals-of-statistics/normal-distribution-maximum-likelihood.
