Markov's inequality is a probabilistic inequality. It provides an upper bound to the probability that the realization of a positive random variable is greater than or equal to a given strictly positive threshold.

The proposition below formally states the inequality.

Proposition

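Let $X$ be an integrable random variable defined on a sample space $\Omega$. Let $X(\omega)\geq 0$ for all $\omega\in\Omega$ (i.e., $X$ is a positive random variable). Let $c\in\mathbb{R}_{++}$ (i.e., $c$ is a strictly positive real number). Then, the following inequality, called Markov's inequality, holds:

$$\mathrm{P}(X\geq c)\leq \frac{\mathrm{E}[X]}{c}$$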
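Because the inequality involves only $\mathrm{E}[X]$ and $c$, it is easy to check numerically. The following Python sketch draws from an exponential distribution with mean 2 (an arbitrary choice made only for illustration) and compares the empirical tail probability with the bound; `markov_check` is a hypothetical helper name, not part of any library.

```python
import random


def markov_check(samples, c):
    """Return (empirical P(X >= c), empirical E[X] / c) for a list of draws.

    Both quantities are computed from the same sample, so tail <= bound holds
    for the sample's empirical distribution (up to floating-point rounding).
    """
    if c <= 0:
        raise ValueError("c must be strictly positive")
    if any(x < 0 for x in samples):
        raise ValueError("Markov's inequality requires a positive random variable")
    n = len(samples)
    tail = sum(1 for x in samples if x >= c) / n   # empirical P(X >= c)
    bound = sum(samples) / n / c                   # empirical E[X] / c
    return tail, bound


# Hypothetical example: 100,000 exponential draws with mean 2 (rate 0.5).
rng = random.Random(0)
xs = [rng.expovariate(0.5) for _ in range(100_000)]
for c in (1.0, 2.0, 5.0, 10.0):
    tail, bound = markov_check(xs, c)
    print(f"c={c:5.1f}  P(X>=c)={tail:.4f}  E[X]/c={bound:.4f}")
```

The gap between the two columns grows with $c$: the bound uses only the mean, so it can be loose for distributions whose tails decay quickly.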

Reading and understanding the proof of Markov's inequality is highly recommended because it is an interesting application of many elementary properties of the expected value.

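Proof

First note that

$$1 = 1_{\{X<c\}} + 1_{\{X\geq c\}}$$

where $1_{\{X<c\}}$ is the indicator of the event $\{X<c\}$ and $1_{\{X\geq c\}}$ is the indicator of the event $\{X\geq c\}$.

As a consequence, we can write

$$\mathrm{E}[X] = \mathrm{E}\left[X\left(1_{\{X<c\}} + 1_{\{X\geq c\}}\right)\right] = \mathrm{E}\left[X 1_{\{X<c\}}\right] + \mathrm{E}\left[X 1_{\{X\geq c\}}\right]$$

where the second equality follows from the linearity of the expected value.

Now, note that $X 1_{\{X<c\}}$ is a positive random variable and that the expected value of a positive random variable is positive:

$$\mathrm{E}\left[X 1_{\{X<c\}}\right]\geq 0$$

Therefore,

$$\mathrm{E}[X] = \mathrm{E}\left[X 1_{\{X<c\}}\right] + \mathrm{E}\left[X 1_{\{X\geq c\}}\right] \geq \mathrm{E}\left[X 1_{\{X\geq c\}}\right]$$

Now, note that the random variable $c 1_{\{X\geq c\}}$ is smaller than or equal to the random variable $X 1_{\{X\geq c\}}$ for any $\omega\in\Omega$:

$$c\, 1_{\{X\geq c\}}(\omega) \leq X(\omega)\, 1_{\{X\geq c\}}(\omega)$$

because, trivially, $c$ is never greater than $X(\omega)$ when the indicator $1_{\{X\geq c\}}(\omega)$ is not zero, and both sides are equal to zero when it is. Thus, by the monotonicity of the expected value, we have that

$$\mathrm{E}\left[c 1_{\{X\geq c\}}\right] \leq \mathrm{E}\left[X 1_{\{X\geq c\}}\right]$$

Furthermore, by using the linearity of the expected value and the fact that the expected value of an indicator is equal to the probability of the event it indicates, we obtain

$$\mathrm{E}\left[c 1_{\{X\geq c\}}\right] = c\,\mathrm{E}\left[1_{\{X\geq c\}}\right] = c\,\mathrm{P}(X\geq c)$$

The above inequalities can be put together:

$$\mathrm{E}[X] \geq \mathrm{E}\left[X 1_{\{X\geq c\}}\right] \geq \mathrm{E}\left[c 1_{\{X\geq c\}}\right] = c\,\mathrm{P}(X\geq c)$$

Finally, since $c$ is strictly positive, we can divide both sides of the inequality $\mathrm{E}[X]\geq c\,\mathrm{P}(X\geq c)$ by $c$ to obtain Markov's inequality:

$$\mathrm{P}(X\geq c)\leq \frac{\mathrm{E}[X]}{c}$$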
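Each quantity in the chain of inequalities of the proof can be computed exactly for a discrete random variable that is uniformly distributed on a finite list of values. The Python sketch below does this with the standard `fractions` module to avoid rounding; the values $0,1,2,3,10$, the threshold $c=3$ and the helper name `proof_chain` are hypothetical choices made for illustration.

```python
from fractions import Fraction


def proof_chain(values, c):
    """Evaluate the quantities in the proof for X uniform on `values`.

    `values` and `c` should be integers or Fractions so the arithmetic is exact.
    Returns (E[X], E[X 1{X<c}], E[X 1{X>=c}], c P(X>=c)).
    """
    n = len(values)
    ind_ge = [1 if x >= c else 0 for x in values]  # indicator of {X >= c}
    ind_lt = [1 - i for i in ind_ge]               # indicator of {X < c}
    e_x = Fraction(sum(values), n)                                     # E[X]
    e_low = Fraction(sum(x * i for x, i in zip(values, ind_lt)), n)   # E[X 1{X<c}]
    e_high = Fraction(sum(x * i for x, i in zip(values, ind_ge)), n)  # E[X 1{X>=c}]
    c_prob = c * Fraction(sum(ind_ge), n)                              # c P(X>=c)
    return e_x, e_low, e_high, c_prob


e_x, e_low, e_high, c_prob = proof_chain([0, 1, 2, 3, 10], 3)
print(e_x, e_low, e_high, c_prob)  # 16/5 3/5 13/5 6/5
```

The output reproduces the chain $16/5 = 3/5 + 13/5 \geq 13/5 \geq 6/5$; dividing the outer inequality by $c=3$ gives $\mathrm{P}(X\geq 3) = 2/5 \leq 16/15$.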

This property also holds when $X\geq 0$ almost surely (in other words, there exists a zero-probability event $E$ such that $\{X<0\}\subseteq E$).

Example

Suppose that an individual is extracted at random from a population of individuals having an average yearly income of \$40,000.

What is the probability that the extracted individual's income is greater than \$200,000?

In the absence of more information about the distribution of income, we can use Markov's inequality to calculate an upper bound to this probability. Denote by $X$ the income of the extracted individual (a positive random variable). Then,

$$\mathrm{P}(X>200{,}000)\leq \mathrm{P}(X\geq 200{,}000)\leq \frac{\mathrm{E}[X]}{200{,}000} = \frac{40{,}000}{200{,}000} = \frac{1}{5}$$

Therefore, the probability of extracting an individual having an income greater than \$200,000 is at most $1/5$, that is, 20%.
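The arithmetic of the example can be reproduced in a few lines. The Python sketch below, which uses the hypothetical helper name `markov_bound`, computes the bound and then checks it against a made-up two-point income distribution (80% of individuals earning \$0 and 20% earning exactly \$200,000) that has the same \$40,000 mean. For that distribution $\mathrm{P}(X\geq 200{,}000)$ equals the bound $1/5$ exactly, which illustrates that, when only the mean is known, the bound on $\mathrm{P}(X\geq 200{,}000)$ cannot be improved.

```python
from fractions import Fraction


def markov_bound(mean, c):
    """Markov upper bound E[X] / c on P(X >= c), for a positive X and c > 0."""
    if c <= 0:
        raise ValueError("c must be strictly positive")
    return Fraction(mean) / Fraction(c)


bound = markov_bound(40_000, 200_000)
print(bound)  # 1/5

# Hypothetical two-point income distribution with the same mean:
# 80% of individuals earn 0 and 20% earn exactly 200,000.
support = {0: Fraction(4, 5), 200_000: Fraction(1, 5)}
mean = sum(x * p for x, p in support.items())              # E[X]
tail = sum(p for x, p in support.items() if x >= 200_000)  # P(X >= 200,000)
print(mean, tail)  # 40000 1/5
```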

Markov's inequality has several applications in probability and statistics.

For example, it is used:

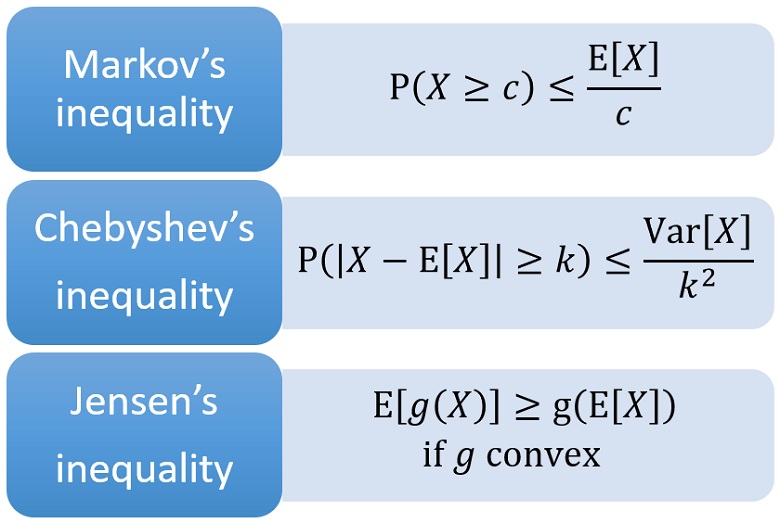

- to prove Chebyshev's inequality;
- in the proof that mean square convergence implies convergence in probability;
- to derive upper bounds on tail probabilities (Exercise 2 below).
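As an illustration of the first application, applying Markov's inequality to the positive random variable $(X-\mu)^2$ with threshold $k^2$, where $\mu=\mathrm{E}[X]$, gives Chebyshev's inequality $\mathrm{P}(|X-\mu|\geq k)\leq \mathrm{Var}[X]/k^2$. The Python sketch below checks this numerically; the standard normal sample, the seed and the name `chebyshev_via_markov` are illustrative assumptions, and the mean and variance used are the sample's own (divide-by-$n$) moments rather than the true ones.

```python
import random
import statistics


def chebyshev_via_markov(samples, k):
    """Return (empirical P(|X - mu| >= k), Markov bound on it via Y = (X - mu)^2).

    The events {|X - mu| >= k} and {Y >= k^2} coincide, so Markov's inequality
    applied to the positive variable Y gives P(|X - mu| >= k) <= E[Y] / k^2.
    Here mu and E[Y] = Var[X] are the sample's own (divide-by-n) moments.
    """
    if k <= 0:
        raise ValueError("k must be strictly positive")
    mu = statistics.fmean(samples)
    y = [(x - mu) ** 2 for x in samples]             # positive random variable Y
    tail = sum(1 for v in y if v >= k * k) / len(y)  # empirical P(Y >= k^2)
    bound = statistics.fmean(y) / (k * k)            # Markov bound E[Y] / k^2
    return tail, bound


# Hypothetical example: 50,000 standard normal draws.
rng = random.Random(1)
xs = [rng.gauss(0.0, 1.0) for _ in range(50_000)]
for k in (1.0, 2.0, 3.0):
    tail, bound = chebyshev_via_markov(xs, k)
    print(f"k={k:.0f}  P(|X-mu|>=k)={tail:.4f}  Var/k^2={bound:.4f}")
```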

Below you can find some exercises with explained solutions.

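Exercise 1

Let $X$ be a positive random variable whose expected value is $\mathrm{E}[X]=\mu$, and let $c$ be a strictly positive constant. Find a lower bound to the probability $\mathrm{P}(X<c)$.

Solution

First of all, we need to use the formula for the probability of a complement:

$$\mathrm{P}(X<c) = 1-\mathrm{P}(X\geq c)$$

Now, we can use Markov's inequality:

$$\mathrm{P}(X\geq c)\leq \frac{\mu}{c}$$

Multiplying both sides of the inequality by $-1$, which reverses its direction, we obtain

$$-\mathrm{P}(X\geq c)\geq -\frac{\mu}{c}$$

Adding $1$ to both sides of the inequality, we obtain

$$\mathrm{P}(X<c) = 1-\mathrm{P}(X\geq c)\geq 1-\frac{\mu}{c}$$

Thus, the lower bound is

$$\mathrm{P}(X<c)\geq 1-\frac{\mu}{c}$$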
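For a positive $X$ with $\mathrm{E}[X]=\mu$ and a threshold $c>0$, the argument above gives the lower bound $\mathrm{P}(X<c)\geq 1-\mu/c$. The Python sketch below evaluates it for the hypothetical values $\mu=3$ and $c=12$, and compares it with the exact probability for one particular positive distribution with that mean, an exponential distribution (an assumption made only for illustration). The hypothetical helper `markov_lower_bound` also clips the bound at 0, since $1-\mu/c$ is negative, and therefore uninformative, when $c<\mu$.

```python
import math
from fractions import Fraction


def markov_lower_bound(mu, c):
    """Lower bound on P(X < c) for a positive X with E[X] = mu.

    Uses P(X < c) = 1 - P(X >= c) >= 1 - mu / c, clipped at 0 because a
    negative lower bound on a probability carries no information.
    """
    if c <= 0:
        raise ValueError("c must be strictly positive")
    return max(Fraction(0), 1 - Fraction(mu) / Fraction(c))


mu, c = 3, 12                  # hypothetical values
lb = markov_lower_bound(mu, c)
exact = 1 - math.exp(-c / mu)  # P(X < c) if X were exponential with mean mu
print(lb, round(exact, 4))     # 3/4 0.9817
```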

Exercise 2

Let $X$ be a random variable such that the expected value […] exists and is finite. Use the latter expected value to derive an upper bound to the tail probability […], where […] is a positive constant.

Solution

By Markov's inequality, we have […]


Please cite as:

Taboga, Marco (2021). "Markov's inequality", Lectures on probability theory and mathematical statistics. Kindle Direct Publishing. Online appendix. https://www.statlect.com/fundamentals-of-probability/Markov-inequality.
